Errors in Thinking

Cognitive Errors, Wishful Thinking and Sacred Truths

https://www.humantruth.info/thinking_errors.html

By Vexen Crabtree 2022

We all suffer from systematic thinking errors1,2 which fall into three main types: (1) internal cognitive errors; (2) errors of emotion3, perception and memory; and (3) social errors that result from the way we communicate ideas and the effects of traditions and dogmas. Some of the most common errors are the misperception of random events as evidence that backs up our beliefs, the habitual overlooking of contradictory data, our expectations and current beliefs actively changing our memories and our perceptions, and the use of assumptions to fill in unknown information. These occur naturally and subconsciously even when we are trying to be truthful and honest. Many of these errors arise because our brains are highly efficient (rather than accurate) and we are applying evolutionarily developed cognitive rules of thumb to the complexities of life4,5. We will fly into defensive and heated arguments at the mere suggestion that our memory is faulty, and yet memory is infamously unreliable and prone to subconscious inventions. They say "few things are more dangerous to critical thinking than to take perception and memory at face value"6.

We were never meant to be the cool, rational and logical computers that we pretend to be. Unfortunately, and we find it hard to admit this to ourselves, many of our beliefs are held because they're comforting or simple7. In an overwhelming world, simplicity lets us get a grip. Human thinking errors can lead individuals, or whole communities, to come to explain types of events and experiences in fantastical ways. Before we can guard comprehensively against such cumulative errors, we need to learn the ways in which our brains can misguide us - lack of knowledge of these sources of confusion leads many astray8.

Learning to think skeptically and carefully and to recognize that our very experiences and perceptions can be coloured by societal and subconscious factors should help us to maintain impartiality. Beliefs should not be taken lightly, and evidence should be cross-checked. This especially applies to "common-sense" facts that we learn from others by word of mouth and to traditional knowledge. Above all, however, our most important tool is knowing what types of cognitive errors we, as a species, are prone to making.

“Unless we recognize these sources of systematic distortion and make sufficient adjustments for them, we will surely end up believing some things that just aren't so.”

Thomas Gilovich (1991)9

- Cognitive and Internal Errors

- Pareidolia: Seeing Patterns That Aren't There

- Selection Bias and Confirmation Bias

- The Forer (or Barnum) Effect

- Wishful Thinking

- Blinded by the Small Picture

- We Dislike Changing Our Minds: Status Quo Bias and Cognitive Dissonance

- Physiological Causes of Strange Experiences

- Emotions: The Secret and Honest Banes of Rationalism

- Mirrors That We Thought Were Windows

- Social Errors

- The False and Conflicting Experiences of Mankind

- Commentaries

- The Prevention of Errors: Science and Skepticism

- Links

1. Cognitive and Internal Errors

Many errors in thinking do not come from the fact that we don't know enough. Sometimes, we do. But most of the time our brains fill in the gaps, and unfortunately the way our brains put together partial evidence results in potentially false conclusions. These systematic problems with the way we think are called cognitive errors, and they occur below the level of consciousness. Much research has been done in this area, and continues. "The research is painting a broad new understanding of how the mind works. Contrary to the conventional notion that people absorb information in a deliberate manner, the studies show that the brain uses subconscious "rules of thumb" that can bias it into thinking that false information is true"10. These cognitive errors and rules of thumb are discussed on this page. Thomas Gilovich published a book concentrating on the subtle mistakes made in everyday thinking, and introduces the subject like this:

“People do not hold questionable beliefs simply because they have not been exposed to the relevant evidence [...] nor do people hold questionable beliefs simply because they are stupid or gullible. [... They] derive primarily from the misapplication or overutilization of generally valid and effective strategies for knowing. [... Many] have purely cognitive origins, and can be traced to imperfections in our capacities to process information and draw conclusions. [...] They are the products, not of irrationality, but of flawed rationality.”

"How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life"

Thomas Gilovich (1991)11

“Research psychologists have for a long time explored the mind's impressive capacity for processing information. We have an enormous capacity for automatic, efficient, intuitive thinking. Our cognitive efficiency, though generally adaptive, comes at the price of occasional error. Since we are generally unaware of these errors entering our thinking, it can pay us to identify ways in which we form and sustain false beliefs.”

"Social Psychology" by David Myers (1999)5

1.1. Pareidolia: Seeing Patterns That Aren't There

#apophenia #pareidolia #thinking_errors

The cognitive process of seeing patterns in ambiguous data is called apophenia. When it happens with images or sound, it is pareidolia. They're common 'errors in perception'12. The cause is partly biological. A part of our brain, the fusiform face area, actively looks for shapes, lines or features that might possibly be a human face. It does so devoid of context, and reports with urgency and confidence when it thinks it has results. That's why most pareidolia involves the perception of human forms in messy visual data. It "explains why some people see a face on Mars or the man in the moon [... or] the image of Mother Teresa in a cinnamon bun, or the Virgin Mary in the bark of a tree"13. Auditory pareidolia is responsible for when we mistake a whistling breeze for a whispering human voice. When people listen with expectation of hearing a voice they will hear spoken words in pure noise14.

We often misperceive random events in a way that supports our own already-existing beliefs or hunches15 and it goes against our feeling of common-sense to even consider our perception wrong. Psychologist Jonah Lehrer says "the world is more random than we can imagine. That's what our emotions can't understand"16. The tendency for people to see more order in nature than there is was noted as long ago as the seventeenth century by Francis Bacon - he called such errors due to human nature the 'idols of the tribe'17. To study pareidolia sociologists have presented true sets of random results and analysed subjects' responses to them. Coin flips, dice throws and card deals have all revealed that we are naturally prone to spotting illusory trends18. Pareidolia results in superstitions, magical thinking, ghost and alien sightings, 'Bible codes', pseudo-science and beliefs in all kinds of religious, nonsensical and supernatural things18,19,20.21
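The point about coin flips can be checked with a few lines of code. The following is a minimal sketch, not taken from any of the studies cited here: it generates sequences of fair coin flips and measures the longest streak of identical outcomes in each. The function names, the number of flips and the number of trials are all assumptions chosen for illustration.

```python
import random


def longest_run(flips):
    """Return the length of the longest run of identical consecutive values."""
    best = current = 0
    previous = object()  # sentinel that equals nothing in the sequence
    for flip in flips:
        current = current + 1 if flip == previous else 1
        best = max(best, current)
        previous = flip
    return best


def average_longest_run(n_flips, n_trials, seed=0):
    """Average the longest streak over many sequences of fair coin flips."""
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    total = 0
    for _ in range(n_trials):
        flips = [rng.choice("HT") for _ in range(n_flips)]
        total += longest_run(flips)
    return total / n_trials


if __name__ == "__main__":
    # Illustrative sizes (assumptions): 200 flips per sequence, 1000 sequences.
    print(average_longest_run(n_flips=200, n_trials=1000))
```

Long streaks turn up routinely in data that is purely random, which is why a run of heads feels like a trend even though no trend exists.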

1.2. Selection Bias and Confirmation Bias

“It is the peculiar and perpetual error of the human understanding to be more moved and excited by affirmatives than negatives.”

Francis Bacon (1620)22

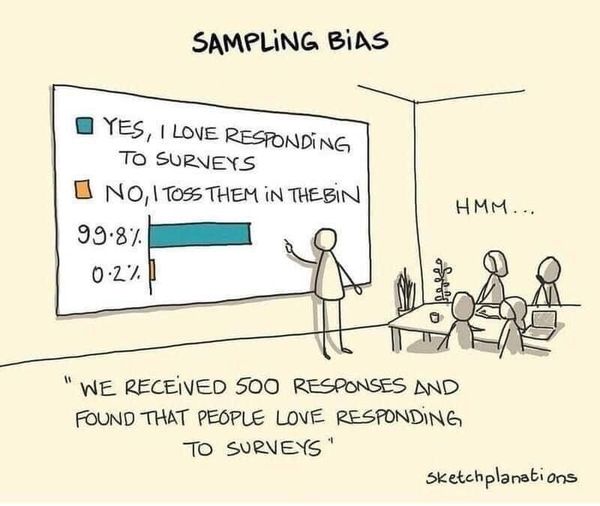

Selection Bias is the result of poor sampling techniques23 whereby we use partial and skewed data in order to back up our beliefs. It includes confirmation bias, which is the unfortunate way in which we humans tend to notice evidence that supports our ideas and ignore evidence that contradicts them24,25, and actively seek out flaws in evidence that contradicts our opinion (but automatically accept evidence that supports us)26,27. We are all pretty hard-working when it comes to finding arguments that support us, and when we find opposing views, we work hard to discredit them. But we don't work hard to discredit those who agree with us. In other words, we work at undermining sources of information that sit uncomfortably with what we believe, and we idly accept those who agree with us.

All of this is predictable enough for an individual, but another form of Selection Bias occurs in mass media publications too. Cheap tabloid newspapers publish every report that shows foreigners in a bad light, or shows that crime is bad, or that something-or-other is eroding proper morality. And they mostly avoid any reports that say the opposite, because such assuring results do not sell as well. Newspapers as a whole simply never report anything boring - therefore they constantly support world-views that are divorced from everyday reality. Sometimes commercial interests skew the evidence that we are exposed to - drugs companies conduct many pseudo-scientific trials of their products but their choice of what to publish is manipulative - studies in 2001 and 2002 show that "those with positive outcomes were nearly five times as likely to be published as those that were negative"28. Interested companies just have to keep paying for studies to be done, waiting for a misleading positive result, and then publish it and make it the basis of an advertising campaign. The public have few resources to overcome this kind of orchestrated Selection Bias.
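The mechanism of selective publication can be sketched as a small simulation. This is an illustration under invented assumptions, not a reconstruction of the studies cited above: the drug is given a true effect of zero, each trial measures noisy outcomes for a handful of patients, and only trials whose average result exceeds an arbitrary threshold are "published". The names `run_trial` and `simulate_publication_bias`, and every number used, are hypothetical.

```python
import random
import statistics


def run_trial(rng, true_effect=0.0, noise=1.0, n_patients=50):
    """Simulate one trial and return the mean measured effect across patients."""
    outcomes = [rng.gauss(true_effect, noise) for _ in range(n_patients)]
    return statistics.fmean(outcomes)


def simulate_publication_bias(n_trials=500, threshold=0.2, seed=1):
    """Run many trials of an ineffective drug and 'publish' only flattering ones."""
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    all_results = [run_trial(rng) for _ in range(n_trials)]
    # The selection step: results at or below the threshold are never seen.
    published = [result for result in all_results if result > threshold]
    return all_results, published


if __name__ == "__main__":
    all_results, published = simulate_publication_bias()
    print("trials run:       ", len(all_results))
    print("trials published: ", len(published))
    print("mean of all:      ", statistics.fmean(all_results))
    print("mean of published:", statistics.fmean(published))
```

The average over every trial stays close to zero, while the average over the published subset is positive by construction. A reader who sees only the published trials has no way to tell the difference.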

RationalWiki mentions that "a website devoted to preventing harassment of women unsurprisingly concluded that nearly all women were victims of harassment at some point"29. Another example, a statistic that is most famous for being wrong, is that one in ten males is homosexual. This was based on a poll done on the community surrounding a gay-friendly publication - the sample of respondents was skewed away from the true average, hence, misleading data was observed.
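The effect of polling a skewed community can be shown with a generic sketch. All figures below are invented for illustration and do not correspond to any real survey: a small "niche" group (for example, the readership of a specialist publication) is assumed to have a much higher rate of some trait than everyone else, and the rate measured in a random sample is compared with the rate measured in a sample drawn only from that group.

```python
import random


def build_population(rng, size=100_000, niche_share=0.05,
                     rate_in_niche=0.40, rate_elsewhere=0.03):
    """Return a list of (in_niche, has_trait) pairs. All rates are invented."""
    people = []
    for _ in range(size):
        in_niche = rng.random() < niche_share
        rate = rate_in_niche if in_niche else rate_elsewhere
        people.append((in_niche, rng.random() < rate))
    return people


def trait_rate(people):
    """Fraction of the given people who have the trait."""
    return sum(has_trait for _, has_trait in people) / len(people)


rng = random.Random(2)  # fixed seed only so the sketch is repeatable
population = build_population(rng)

# A fair poll: every member of the population is equally likely to be asked.
random_sample = rng.sample(population, 2000)

# A skewed poll: only members of the niche group are asked.
niche_sample = [person for person in population if person[0]][:2000]

print("whole population:", trait_rate(population))
print("random sample:   ", trait_rate(random_sample))
print("niche sample:    ", trait_rate(niche_sample))
```

The random sample lands near the population's true rate, while the niche sample reports the rate of the niche group and nothing more. Both samples are the same size; only the way the respondents were chosen differs.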

The only solution for these problems is proper and balanced statistical analysis, after actively and methodically gathering data, alongside raising awareness of Selection Bias, Confirmation Bias, and other thinking errors. This level of critical thinking is quite a rare endeavour in anyone's personal life - we don't have the time, the inclination, the confidence, or the skill to properly evaluate subjective data. Unfortunately fact-checking is also rare in mass media publications. Personal opinions and news outlets ought to be given little trust.

1.3. The Forer (or Barnum) Effect

#astrology #barnum_effect #bertram_forer #cold_reading #forer_effect #horoscopes #pseudoscience #psychology #thinking_errors

The Forer effect is the seeing of a personality statement as "valid even though it could apply to anyone", and is named after the psychologist who famously demonstrated it30. In 1949, Bertram Forer conducted a personality test, and then gave all of his students exactly the same personality profile, which he constructed from random horoscopes. The students rated the accuracy of their profile at over 80% on average31! This was occasionally previously known as the Barnum effect after a popularist entertainer. Extensive studies have found that this effect applies well to horoscopes, other astrological readings, messages given from the dead by spiritualists, various cold reading tricks and other profiling endeavours32. It is often mistaken for being a product of magical or supernatural means.

1.4. Wishful Thinking 33

#forer_effect #psychology #selection_bias #thinking_errors

The rather common-sense assertion that we tend to believe what we want to believe is in fact backed up by a large amount of empirical evidence34, not only in terms of our beliefs about ourselves, but also affecting our beliefs about the world35.

Although more generic, this may be the driving impulse behind both Selection Bias and the Forer Effect discussed above. All three can be combatted with the "simple" method of detecting when we want something to be true and being stricter with ourselves in investigating opposing theories and ideas more openly and honestly. Of course, our secret is... we don't want to do that. And hence, wishful thinking is a strong contender for the most powerful driver of delusion and error.

To truly understand this cause of skew, we need to understand why we want the things we do. Answering that question requires a long and slow drive down the avenues of both philosophy and neurology. At the very least, we can acknowledge that all of us are, often consciously, intentionally masking problems and doubts in order to promote the things we want to be true.

1.5. Blinded by the Small Picture

“A psychologist [Dr Hecke] argues that much what we label "stupidity" can be better explained as blind spots. She draws on research in creativity, cognitive psychology, critical thinking, child development, education, and philosophy to show how we (all of us, including the smartest people) create the very blind spots that become our worst liabilities. She devotes a chapter to each of ten blind spots, among them: not stopping to think, my-side bias, jumping to conclusions, fuzzy evidence, and missing the big picture.”

Skeptical Inquirer (2007)36

Much of what Hecke argues concerns the way we examine the data surrounding events. We tend to concentrate on the coincidences and specifics of the case in question, and miss out all the surrounding facts and figures. Without taking disconfirmation (as seen above) into account, the coincidences that we notice can become misleading.

1.6. We Dislike Changing Our Minds: Status Quo Bias and Cognitive Dissonance37

#beliefs #cognitive_dissonance #psychology #status_quo_bias #thinking_errors

We don't like changing our minds38. Especially once we have put conscious time and effort into a belief, or it becomes emotional, it's difficult to fairly evaluate contrary evidence against it39,40,41. We often stick with the beliefs we first encounter: very few change their religion42,43. This is called belief perseverance38,44 and status quo bias, noted as long ago as Aristotle in the 4th century BCE45.

When we do recognize that new evidence contradicts us, or even that our own valued beliefs contradict one another, we experience cognitive dissonance46. Even then, we can go to extreme lengths to avoid giving up a belief even when we suspect we've made a mistake47,48 and we often defend beliefs even when there is evidence against them47,48,40.

Some academics warn that intelligence can sometimes work against us because we can find it easy to dismiss evidence and contradictions through the use of clever arguments and manipulations49. We don't have the humility to admit that we're wrong50 and when we do change our minds, we often deny it, both to others and even to ourselves38. It is very difficult to be unbiased and open in our approach to truth, which is why formalized schemes such as The Scientific Method are so important51.

1.7. Physiological Causes of Strange Experiences

If our biochemical brains and physiology sometimes go haywire but we are not aware of a dysfunction, we are apt to misinterpret the events. So, our expectations, culture and abstract thinking all conspire to present to us a false experience. Some medical conditions can cause these to be recurring and frequent occurrences. Through studying these, science has come to understand such experiences as out-of-body experiences, possession and night terrors, and through simpler biological knowledge has come to understand the Incubus and Succubus, and many other superstitious beliefs. Physical problems with the eyes can cause wild effects such as seeing blood run up walls, dust storms, clouds, and haloes that are not really there52. Isolated fits and seizures can cause bizarre effects such as odd lights, feelings, smells, auras and déjà vu that can be mild and subtle53.

Sleep Paralysis

“Sleep paralysis, or more properly, sleep paralysis with hypnagogic and hypnopompic hallucinations have been singled out as a particularly likely source of beliefs concerning not only alien abductions, but all manner of beliefs in alternative realities and otherworldly creatures. Sleep paralysis is a condition in which someone, most often lying in a supine position, about to drop off to sleep, or just upon waking from sleep realizes that s/he is unable to move, or speak, or cry out. This may last a few seconds or several moments, occasionally longer. People frequently report feeling a "presence" that is often described as malevolent, threatening, or evil. An intense sense of dread and terror is very common. The presence is likely to be vaguely felt or sensed just out of sight but thought to be watching or monitoring, often with intense interest, sometimes standing by, or sitting on, the bed. On some occasions the presence may attack, strangling and exerting crushing pressure on the chest. People also report auditory, visual, proprioceptive, and tactile hallucinations, as well as floating sensations and out-of-body experiences (Hufford, 1982). These various sensory experiences have been referred to collectively as hypnagogic and hypnopompic experiences (HHEs). People frequently try, unsuccessfully, to cry out. After seconds or minutes one feels suddenly released from the paralysis, but may be left with a lingering anxiety. Extreme effort to move may even produce phantom movements in which there is proprioceptive feedback of movement that conflicts with visual disconfirmation of any movement of the limb. People may also report severe pain in the limbs when trying to move them. [...] A few people may have very elaborate experiences almost nightly (or many times in a night) for years. Aside from many of the very disturbing features of the experience itself (described in succeeding sections) the phenomenon is quite benign.”

"Sleep Paralysis and associated hypnagogic and hypnopompic experiences"

There are many other physiological causes of strange experiences of course, and recent experiments and investigations have allowed exciting results to shed light on the inner working of the Human brain. In particular, a series of experiments have resulted in a deeper understanding of why people experience the idea of god, and these I have put on a separate page: "Experiences of God are Illusions, Derived from Malfunctioning Psychological Processes" by Vexen Crabtree (2002).

1.8. Emotions: The Secret and Honest Banes of Rationalism

#emotions #instincts #psychology #thinking_errors

We all know that emotions can get in the way of sensible thinking: our decisions and beliefs are influenced unduly by emotional reactions that often make little sense3. We jump to instinctive conclusions that are not warranted by a rational appraisal of the evidence, and we only seek disconfirming evidence for opinions that we don't like. We are compelled to dismiss doubts and dismiss evidence that goes against our train of thought if our emotional intuitions continue to drive us54. With academic understatement, one psychologist states "we are not cool computing machines, we are emotional creatures [and] our moods infuse our judgments"55 and another states "some beliefs are formed based primarily upon an emotional evaluation"56. In public we heartily deny that our emotions influence our formal decisions, especially in non-trivial or non-social matters. We do the same with our private thoughts; when debating with ourselves we often falsely "construe our feelings intellectually"57. There's got to be a better way! Many argue that logical thinking requires the suppression of emotion58. Should we strive to be like the Vulcans from Star Trek? Psychologists are saying that this isn't for the best. Our emotions are experts at interpreting social situations and predicting other people. Behind the scenes, our brains take many things into account that we don't consciously notice. So in many situations (especially those involving living beings) our emotional reactions are better thought-out than intellectual alternatives5. We have to learn to be wise and to learn when our feelings are working to our advantage, and when they are merely getting in the way.4,25

2. Mirrors That We Thought Were Windows

2.1. Perception is Influenced By Expectation: You Can't Always Trust Your Senses

#epistemology #expectation #experiences #memories #perception #psychology #thinking_errors

Our expectations and our interpretation of sensory input form part of how we perceive the world. We don't just passively accept sensory information, we construct a synthesis between that and what we think we should be seeing59,60. The very things we see and hear, clear as daylight and right in front of our eyes, can be falsehoods caused by expectation61. We trust our senses far too much; we will fly into defensive and heated arguments at the mere suggestion that we saw something wrongly, and yet our Human faculties are unreliable and very prone to such invention. They say "few things are more dangerous to critical thinking than to take perception and memory at face value"62. One fascinating aspect of this is our enjoyment of drinks. For example studies have shown consistently that when people don't know which wine they are drinking they tend to enjoy the cheapest ones most, as judged by their taste, texture and smell. But if you add packaging then they rate the wines that are most expensive to be the best63. The effects of expectation are not always trivial however, and police psychologists such as Elizabeth Loftus have spent their lives educating investigators on the power of expectation over our senses. You simply cannot blindly trust what you see.

For more, see:

- "Perception is Influenced By Expectation: You Can't Always Trust Your Senses" by Vexen Crabtree (2016).

2.2. What Every Lover Knows: Memory is Actively Interpreted

#memories #perception #subjectivism #thinking_errors

Our memories change over time and frequently they are simply wrong64,65. But most of us tend to think of memories as accurate accounts65. Extensive studies have shown that our memories of events are active interpretations of the past rather than picture-perfect records of it66,67. The best way to avoid errors is to write things down as early as possible65. Our recall of the past is affected by our present expectations and by our current knowledge and state of mind68. We tend to suppress and alter memories that damage our self-esteem65,69. Psychologists such as Elizabeth Loftus have shown through repeated experiments that simply by asking people questions about what they think they saw or heard previously, you make their subconscious whir into a creative drive in order to answer the question, even if it means making up details, and allowing assumptions and feelings to silently trick our minds into inventing elements of memories. Memories that are full of details give us a great sense of confidence, but, such memories are just as likely to contain accidental fabrications, many errors, and a great number of "filled-in" details which we simply subconsciously invented.

2.3. Our Attempts to be Logical70

In analysing the many factors that influence human behaviour, the economist Joseph Schumpeter wrote that we "plan too much and always think too little"71; we often run with our existing thoughts, perceived facts and current ideas, and simulate and plan future events based on them. Our plans are often very clever, but we miss out on the primary, necessary first steps: Thinking quietly and deeply about various eventualities and possibilities.

The cutting philosopher, Friedrich Nietzsche, says that even amongst those who do try to think widely and freshly, they merely delude themselves: "the greater part of the conscious thinking of a philosopher is secretly influenced by his instincts"72. We defend those ideas, and think of them, as purely rational constructions.

“In the end, many of us will come to identify good thinking with confirming our biases. We will be pleased with ourselves as we find that the more we learn the more we learn we were right all along!”

"Unnatural Acts: Critical Thinking, Skepticism, and Science Exposed!" by Robert Todd Carroll (2011)73

“There is good scientific evidence to support the notion that being really intelligent and knowledgeable can be a disadvantage to some thinkers because of the increased ability to come up with rationalizations in defense of a position one originally adopted. [...] The smarter one is, the easier it is to explain away strong evidence contrary to one's beliefs.”

"Unnatural Acts: Critical Thinking, Skepticism, and Science Exposed!" by Robert Todd Carroll (2011)49

We often try hard to be coldly rational and logical. It is difficult and requires concentration and time. The actual use of formal logic is a product of philosophy, and requires quite a period of institutional training to accomplish. By writing down assumptions, relationships and factoids and working them out step-by-step, you can learn what conclusions are warranted - or more frequently - learn which ones are not warranted. But this is a laborious and specialist process. Even the basic steps, such as defining the terms we want to use, are often too difficult. Failure to perform that first step is a "want [failure] of method" and results in many "absurd conclusions", to use the words of Thomas Hobbes (1651)74.

It is simply the case that most of our opinions are not based on logical analysis even when we are trying our best to make them so. "Many believe certain [untrue] things because they follow faulty rules of reasoning" writes the skeptic Robert Todd Carroll (2011)2.

2.4. The Worth of Experts and Specialists 75

#experts #intelligence #knowledge #science #specialisms #thinking_errors

Outside of their field of expertise, specialists can believe just as much nonsense as everyone else. In fact, if you are intelligent, it can be even harder to shake off a crazy idea once it implants itself, because you are better at finding evidence for it. Hence, knowledge of the types of thinking errors becomes very important, but it is rarely researched or taught in school.

Therefore: It is essential to consult the right kind of specialist, and, to allow that advisor to consult his own sub-specialisms. If unsure, ask a generalist whom you trust to delegate his answer to someone more appropriate. "There are many types of scientists, e.g., physicists, chemists, biologists, physiologists, pharmacologists, and hybrid ones like biochemists and biophysicists, etc. One group often has no clue about what another group is talking about"76 - if someone answers outside of their field, their answer must include a measure of their doubt, else, the source is not trustworthy and is as likely as anyone else to repeat common mistakes49.

“'...tendency among Nobel Prize recipients in science to become enamored of strange ideas or even outright pseudoscience in their later years. I investigated the idea and found an even dozen Nobel Prize winners who have advocated ideas that are absurd - excuse me, are not supported by the scientific evidence. (See www.skepdic.com/nobeldisease.com.)'”

"Unnatural Acts: Critical Thinking, Skepticism, and Science Exposed!" by Robert Todd Carroll (2011)49

2.5. Denialism 77

#denialism #psychology #thinking_errors

Denialism is the automatic, unreasonable and stubborn refusal of evidence or arguments against a belief or fact and is a pathological extreme of status quo bias. It stems from emotional, practical or strategic benefits of holding a particular belief, and its loss would cause too much inner disruption or social disruption for its bearer78; the fear of the personal or social repercussions prevents an honest appraisal of the belief. Examples include where beliefs have been held loudly or boastfully, involve political opinions that have been debated hotly in public, beliefs that stem from emotional or childhood events79,78, or failures of personality where pride, ego and stubbornness cause a refusal to admit past mistakes. There are cases where denialists do not, or no longer, believe, but they continue to uphold the appearance of belief for strategic purposes; they do not truly have the desire to debate, just to assert.

The cause of the denialists' reaction is psychological or strategic, and the cure for it comes from psychological, social or situational effects. Direct argumentation is rarely effective; it's more common for the cause of the denial of reality to dissipate quietly.

For more, see:

- "Denialism" by Vexen Crabtree (2025).

3. Social Errors

“We naturally and instinctively gravitate toward beliefs that make us comfortable or fit in with the beliefs of our families and friends.”

"Unnatural Acts: Critical Thinking, Skepticism, and Science Exposed!" by Robert Todd Carroll (2011)2

3.1. Story Telling and Second-Hand Information

#beliefs #buddhism #causes_of_religion #myths #religion #stories #subjectivism #thinking_errors

We humans have a set of instinctive behaviours when it comes to telling stories; we naturally embellish, exaggerate, cover up doubts about the story and add dramatic and cliff-edge moments. We do this because we are genetically programmed to try to tell good stories, which draw attention to ourselves80. These subconscious side-effects of our social instincts have a downside: we are poor at accurately transmitting stories even when we want to81. All the major world religions went through periods of oral transmission of their founding stories, and the longer this state persisted the more variant the stories became82. Hundreds of years of oral tradition in Buddhism led to communities in different regions thinking that the Buddha gained enlightenment in the 5th century BCE, the 8th, the 9th or even in the 11th century BCE and each community thinks it has received the correct information through its oral transmission83. A scientific study of Balkan bards who have memorized "traditional epics rivaling the Iliad in length show that they do not in fact retain and repeat the same material verbatim but rather create a new version each time they perform it" based around a limited number of memorized elements84. A sign of untrustworthiness is that as stories spread they often become more marvellous, more sure of themselves, more fantastic and more detailed rather than less85.

Once false stories are told widely, they are very difficult to counter86. Researcher Benjamin Radford uses the Bell Witch Mystery as an example: a made-up history was published in an 1894 book, "An Authenticated History of the Famous Bell Witch" by Martin Van Buren Ingram. Historians from the 1930s onwards have found, and loudly let it be known, that all of the researchable claims in that book have turned out to be false. But the book was popular, and people love telling stories like it. People continue to believe in it simply because facts are boring, scepticism is annoying and ghost stories are nice to tell; and finally, we tend to wrongly assume that only reasonable stories get told, especially when they're printed86.

3.2. It's Impolite to Reject Anecdotes87

Anecdotes are little stories based on personal experience. They form the ordinary part of a layman's argumentation. "This must be true, because once my friend Jack...". It's very impolite, at least it feels so, to reject anecdotes, to dismiss them, or to openly disbelieve them. The problem is, as soon as you start comparing anecdotes, you quickly realize that large numbers of them contradict each other. The only solution is statistical analysis and rational argumentation. At which point, the rhetoric ends and the science starts!

“There are sound reasons for preferring the data from randomized, double-blind, controlled experiments to the data provided by anecdotes. Even well-educated, highly trained experts are subject to many perceptual, affective, and cognitive biases that lead us into error when evaluating personal experiences. [...] All things being equal, the more impersonal and detached we are in evaluating potential causal events, the less likely error becomes.”

"Unnatural Acts: Critical Thinking, Skepticism, and Science Exposed!"

Robert Todd Carroll (2011)88

Anecdotes aren't good enough for science "even if the anecdotes number in the millions and even if the storytellers are Nobel Prize-winning anointed saints"89. Ideas have to be tested statistically and in a way where systemic human error can be controlled for and eliminated.
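
The gap between a pile of anecdotes and a controlled comparison can be shown with a small simulation. This is only a sketch under invented assumptions: the remedy is hypothetical and does nothing at all, the 30% natural recovery rate is made up, and the function name `simulate_remedy` is purely illustrative.

```python
import random

def simulate_remedy(n_people=100_000, recovery_rate=0.3, seed=0):
    """Simulate a remedy with NO real effect (all numbers are assumptions)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    treated = {"recovered": 0, "total": 0}
    control = {"recovered": 0, "total": 0}
    for _ in range(n_people):
        # Randomly assign each person to the remedy or to the control group.
        group = treated if rng.random() < 0.5 else control
        group["total"] += 1
        # Recovery happens at the same rate in both groups.
        if rng.random() < recovery_rate:
            group["recovered"] += 1
    return treated, control

treated, control = simulate_remedy()

# Every treated person who recovered can honestly say "I took it and got better".
anecdotes = treated["recovered"]
treated_rate = treated["recovered"] / treated["total"]
control_rate = control["recovered"] / control["total"]

print("Sincere success stories:", anecdotes)
print("Recovery rate with remedy:   ", round(treated_rate, 3))
print("Recovery rate without remedy:", round(control_rate, 3))
```

Thousands of sincere success stories appear even though the remedy is useless by construction; only the comparison with the control group reveals that the two recovery rates are practically identical.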

3.3. Traditions and Customs

As a species, we experienced the world as flat. So sure was everyone that those who thought otherwise were declared insane! Yet the common-sense view was wrong, and the consensus was eventually changed. The cultural belief was harming the search for truth. Roger Bacon in 1267 CE listed the "influence of custom" as his second general cause of human ignorance, and "the opinion of the unlearned crowd" as the third90.

We do not have any consistent or absolute way of approaching what we consider true; however, we are capable of telling with certainty when something is false. The Earth is not flat, the Northern Lights are not spiritual in basis, neither are rainbows, the stars or the sun and the moon. Earthquakes are not gods moving underneath the Earth, nor can volcanoes or the weather (probably) be influenced by spirit or totem worship. We are not sure if science has "disproved" souls or God, but they certainly seem less likely than they used to.

We often rely on traditional explanations for events because we simply do not have time to investigate everything ourselves and, most of the time, the pronouncements and findings of experts do not filter down to the general populace. The result is a mixture of messy mass beliefs battling against the top-down conclusions of proper investigations. Status-quo bias and other social factors frequently cause resistance against the conclusions of scientists. For this reason, even such major theories as evolution were sometimes discovered by scientists (in this case, in around the 4th century BCE), then rejected by society and forgotten until they were re-discovered in a world where society was more ready to accept them.

“Many believe palpably untrue things because they were brought up to believe them and the beliefs have been reinforced by people they admire and respect.”

"Unnatural Acts: Critical Thinking, Skepticism, and Science Exposed!"

Robert Todd Carroll (2011)91

Skeptical and rational thinking is pitted, socially, against the subconscious, sub-literate and sub-intellectual world of mass belief.

3.4. Expectations and Culture

Anthropologists have found that our life experiences, which shape our beliefs, change from culture to culture and era to era. For example, people have spotted alien UFOs differently across the decades; different regions and cultures have consistently reported different and conflicting sightings. For a period of time in the UK, UFO reports generally featured furry or fuzzy aliens. Modern UFO reports are of the "grays" kind: thin child-like bodies with enlarged eyes and heads. Different countries report different types of aliens, due to their culture. As culture changes, so does the phenomenon. What does that say about the phenomenon?

Such large-scale change over time casts doubt on the basis of the experience and has been presented (especially in the case of UFOs and demons) as evidence that the phenomenon is self-generated and unreal, and is therefore an unconscious function of the aspirations and expectations of the experiencer.

3.5. Sacred Truths and Dogmas (Religion)

It seems that the chances of us discovering the truth escape most rapidly from us when it comes to the religious truths (dogmas) put forward by organized religious bodies. Here, authority, tradition and psychology all combine to present a formidable wall against the acceptance of new evidence and truths.

“Tell a devout Christian that his wife is cheating on him, or that frozen yogurt can make a man invisible, and he is likely to require as much evidence as anyone else, and will be persuaded only to the extent that you give it. Tell him that the book he keeps by his bed was written by an invisible deity who will punish him with fire for eternity if he fails to accept every incredible claim about the universe, and he seems to require no evidence whatsoever.”

"The End of Faith: Religion, Terror and the Future of Reason" by Sam Harris (2006)92

The issue isn't evidence per se, it's the lack of application of critical thinking to religious received truths, because society has placed religious theories into a sacred zone where they cannot be criticized. You can rationally apply physics to debate the pros and cons of string theory and be praised for your diligence in your search for truth, but if you rationally apply the truths of biology against religion, then many feel you are being not only impolite, but immoral.

That such religion-inspired truths are due more to social factors than to reality can be seen by the highly culture-dependent interpretation of religious experiences.

“Religious experiences tend to conform closely to cultural and religious expectations. Village girls in Portugal have visions of the Virgin Mary - not of the Indian goddess Kali. Native Americans on their vision quest see visions of North American animals not of African ones. Thus it would seem that religious experiences, no matter how intense and all-consuming, are subject to constraint by the cultural and religious norms of the person to whom they occur. Another way of looking at this is to say that there can be no such thing as a pure experience. An experience always happens to a person, and that person already has an interpretive framework through which he or she views the world. Thus, experience and interpretation always combine and interpenetrate.”

"The Phenomenon Of Religion: A Thematic Approach" by Moojan Momen (1999)93

4. The False and Conflicting Experiences of Mankind

#beliefs #consensus #conspiracy_theorists #delusion #epistemology #expectations #experiences #god #illusion #psychosis #religion #subjectivism #supernatural

There are many neurological and physiological causes of odd experiences in our lives. These range from ordinary tricks of the eye94 through to repeated minor epileptic fits that cause nothing more than visual (or aural) hallucinations combined with emotional cues. Many supernatural experiences can be artificially induced through neural electro-stimulation, proving their mundane basis95. Strange and unusual experiences often give rise to strange and unusual beliefs96,97, especially for those people who are not inclined towards finding natural explanations for events. Experiments have found that a person's "psychological tendencies may also be used to predict exact types of 'unexplained' phenomena in which they are likely to believe" and that those who display signs of dissociation are likely to see "a given stimulus item as a paranormal creature, whether Bigfoot or an alien"98.

For example, during sleep paralysis our brain is still suppressing bodily movement and it feels as if we're being forced down on to the bed, complete with much anxiety and fear. Some people have such attacks multiple times. The belief that this is a demonic attack seems natural to many, and even after the physiological and natural causes of sleep paralysis have been explained, many continue to believe in a supernatural source. Of course, this is ridiculed by those who know that such attacks are really alien investigations of the human body. Both explanations are foiled, however, by neuroscientists who understand that the cause of these experiences is biochemical in nature.

Cultural expectations play a large part in the interpretation of personal events, meaning that the resultant beliefs vary according to geographical region. We all find rational arguments to explain away those who experience things that contradict our own interpretation of reality - in secret, when we find people who have experienced things that we don't believe in, we all think we can explain others' experiences better than they themselves can explain them. Investigations into the physiological causes of strange beliefs come from an ancient line of skepticism. In "The Supernatural?" by Lionel A. Weatherley (1891)99 the author intelligently outlines many such investigations - and that was well before modern neurology started making its amazing strides towards understanding the sources of experiences. Despite this knowledge, the masses remain generally unconvinced, and stick to age-old cultural and subjective beliefs. The Christian Pentecostals have a saying, "the man with an experience is never at the mercy of the man with a doctrine"100, meaning that rationality submits to experience. In total, we cannot entirely trust our experiences nor those of others, and more careful investigation is needed by all.

5. Commentaries

5.1. Roger Bacon (1214-1294): Four General Causes of Ignorance

Roger Bacon (1214-1294) was concerned with science and the gathering of knowledge but he suffered many restrictions and punishments from his Church, and the world had to wait another few hundred years before Francis Bacon could exert a greater influence in the same direction. In Opus Majus Roger Bacon says there are four general causes of human ignorance. All of them are social in nature.

“First, the example of frail and unsuited authority. [...] Second, the influence of custom. Third, the opinion of the unlearned crowd. [...] Fourth, the concealment of one's ignorance in a display of apparent wisdom. From these plagues, of which the fourth is the worst, spring all human evils.”

"History of Western Philosophy" by Bertrand Russell (1946)90

Although I doubt that all human evils stem from those elements alone, it is certainly worth thinking carefully about those four sources of misinformation. As social animals, our beliefs are often functions of our communities, and our belief-forming and belief-espousing mechanisms are deeply tied to the instinctual politics of social positions and consensus rather than to evidence-based analysis.

5.2. Francis Bacon (1561-1626): Four Idols of the Mind

Francis Bacon's four idols of the mind are among the most influential parts of his work17. In the words of Bertrand Russell, these idols are the "bad habits of mind that cause people to fall into error". E O Wilson describes them as being the causes of fallacy "into which undisciplined thinkers most easily fall"101. To view the world as it truthfully is, said Bacon, you must avoid these errors. Although they are categorized somewhat differently nowadays, they have been affirmed time and time again by modern cognitive psychologists as the causes of human errors:

Idols of the tribe: "are those that are inherent in human nature"17 so this includes much of what is discussed on this page as the cognitive sources of errors in human thinking. Bacon mentions in particular the habit of expecting more order in natural phenomena than is actually to be found. This is called pareidolia, discussed above. I suspect Bacon calls this error a 'tribal' one because of the preponderance of magical rituals and beliefs that are based around controlling nature through specific actions, when in reality there is no cause and effect between them. Hence, pareidolia: the seeing of patterns in random, complex or ambiguous data where there are no patterns. These misperceived correlations form the basis of superstitious, magical and religious behaviour.

Idols of the imprisoning cave: "personal prejudices, characteristic of the particular investigator"17, "the idiosyncrasies of individual belief and passion"101.

Idols of the marketplace: "the power of mere words to induce belief in nonexistent things"101. Charisma, confidence and enthusiasm are three things that lead us to believe what someone is telling us, even though word of mouth is the poorest method to propagate fact.

Idols of the theatre: "unquestioning acceptance of philosophical beliefs and misleading demonstrations"101. This is us being convinced too easily by confident individuals, rather than by wide engagement with specialists and specialist bodies. Too many mavericks excel at convincing others. The lack of doubt we have in them, and in our own opinions, damages our approach to truth. This is broadly addressed here: Why Question Beliefs? Dangers of Placing Ideas Beyond Doubt, and Advantages of Freethought.

Francis Bacon is cited by many as one of the important founders of the general idea of the scientific method, which is largely concerned with overcoming errors in human thought and perception.

6. The Prevention of Errors: Science and Skepticism

#critical_thinking #epistemology #philosophy #skepticisim #thinking_errors

Skepticism is an approach to understanding the world where evidence, balance and rationality come first: anecdotal stories and personal experience are not automatically accepted as reliable indicators of truth. There are a great many ways in which we can come to faulty conclusions - often based on faulty perceptions - given our imperfect knowledge of the world around us. With practice, we can all become better thinkers through developing our critical-thinking and skeptical skills73. Facts must be checked, especially if they contradict established and stable theories that do have evidence behind them51,102. Thinking carefully, slowly and methodically is a proven method of coming to more sensible conclusions103,4,104. It is difficult to change our minds openly38,40,41,50, but doing so when the evidence requires it honours the truth and encourages others. Skepticism is 'realist' in that it starts with the basic premise that there is a single absolute reality105 which we can best try to understand collectively through the scientific method. Skepticism demands that truth is taken seriously, approached carefully, investigated thoroughly and checked repeatedly.

For more, see: